How to market to robots and win visibility

You’ve just published an amazing piece of content to your website. It’s full of sharp insights. It’s perfect for your audience. It’s exactly the kind of content that should have your clients queuing up.

But here’s what actually happens… Nothing!

No traffic. No enquiries. No impact.

Not because it’s bad content. But because you forgot about your first reader. The one that decides if a human audience will ever see it.

The invisible filter nobody talks about

Every piece of digital content you create must impress an audience you never see.

Machines. Algorithms. Robots.

These systems scan, rank and sort your content before any human gets close. They’re not checking if it’s clever or creative. They’re asking different questions entirely.

Questions like: Can I crawl this? Should I index it? Does it match what people are searching for? Can I trust the source?

If you miss these requirements, your content will fade into digital darkness. It doesn’t matter how brilliant it is.

Your new reality check

Here’s the journey your digital content actually takes.

You hit publish.

The search engine bots arrive… maybe.

They crawl your page… possibly.

They understand your content… hopefully.

They deem it worthy of indexing… fingers crossed.

They rank it against your competitors… good luck.

Then, and only then, a human audience might find it.

Each step is a gate. Each gate has a gatekeeper. And those gatekeepers are machines following rules you probably don’t know.

Traditional marketing says ‘know your audience’. But that advice assumes your audience can actually find you. What you need to do, before anything else, is market to robots. Our in-house SEO experts have been banging the ‘Marketing to Robots’ drum for many years. After all, it’s the robots who see your content first. They decide whether it rises to the top of the search results, or sinks to the darkest depths of the internet, never to see the light of day.

That’s why we’ve written this guide. It’s here to help you think like a robot, to give your content the best chance of getting discovered, crawled, indexed and ranked in Google and other search engines.

Over five parts, you’ll learn:

- Part One – How the algorithms decide which content gets seen

- Part Two – The technical fixes that will unlock your content’s visibility

- Part Three – How to create content that the machines understand

- Part Four – The trust signals that boost your website’s authority

- Part Five – Strategies to futureproof your website for AI-powered search

No fluff. No theory. Just practical guidance for marketing strategists who need results. So, if:

- You’re tired of your best content being ignored in search

- Your competitors have worse content, but it outranks yours

- Your website’s traffic feels random and unpredictable

- You’re tired of guessing what Google wants

Then this guide’s for you. Ready to get started?

Why algorithms decide who sees your content

Part 1: The gatekeepers

Thirty years ago, you could build visibility with decent ads in the Yellow Pages and your local newspapers, and some positive word-of-mouth.

Twenty years ago, having a website, no matter how basic, would help you stand out.

Ten years ago, a well-designed website with solid SEO did the job.

Today? You’re not competing for human attention anymore. You’re competing for approval from the search engine algorithms. These sophisticated systems are the new power brokers of online visibility. They’re like online bouncers guarding every digital door. And they’re very selective about who they let in.

Google’s crawlers decide if your page is worth indexing. Meta’s algorithm chooses who sees your posts in their Facebook and Instagram feeds. LinkedIn’s bots determine if your article reaches the decision-makers in and beyond your network. And YouTube’s recommendations engine controls the fate of your videos.

The decisions the algorithms make aren’t suggestions. They’re verdicts. And they’re made in milliseconds, based on signals many businesses don’t even know exist.

How the robots judge your content

Let’s forget about writing compelling headlines and emotional hooks for a second. The robots don’t care about clever wordplay. They care about:

- Technical signals – Can they access your content? Is your site fast, mobile-friendly and secure?

- Semantic signals – Do they understand what you’re talking about? Are you using language that matches search intent?

- Authority signals – Should they trust you? What’s your track record? Does anyone else vouch for you?

- Behavioural signals – When users do find your website, do they stick around? Or do they bounce straight back to the search results?

Each algorithm weighs these differently. Google prizes experience, expertise, authoritativeness and trustworthiness (more on this later). Meta (Facebook) favours engagement. LinkedIn looks for professional relevance.

But they all share one trait. They decide first. Humans see second.

The cost of playing by yesterday’s rules

Many businesses create content for humans first, assuming visibility will follow. It won’t.

Writing human-centric content is good practice, especially if you’re following Google’s E-E-A-T guidelines. But if you don’t write for the robots first, that content will fail.

You can craft the perfect landing page with compelling copy, stunning design and an irresistible offer. But if the robots can’t crawl it properly, it might as well not exist.

You can write the most insightful industry analysis ever published. But without a proper semantic structure, Google won’t know what it’s about.

And you can build the most innovative product pages in your sector. But with poor technical foundations, they’ll never surface in search.

Creating content that consistently ranks isn’t about gaming the system. It’s about speaking the language of the systems that control visibility.

What this means for your content strategy

Here are three brutal truths:

- Robots are your first audience. Every piece of content you create needs to work for the algorithms before it can work for humans.

- Discovery beats persuasion. The world’s best sales copy is worthless if nobody finds it.

- Technical excellence is non-negotiable. Speed, structure and crawlability aren’t nice-to-haves. They’re essential for your content to thrive in an oversaturated online arena.

This shift demands a fundamental rethink of your content strategy. It’s not about replacing human-centric content creation, but about adding machine-centric architecture underneath it.

Your first action step

It’s time to give your website an honest visibility audit. Pick the three pages that matter most: the ones that should be driving leads. Check:

- Google Search Console: Are they indexed? What queries do they rank for?

- Site speed tools: How fast do they load?

- Mobile testing: Do they work on every device?

- Search results: Where do they actually appear? Who’s beating you?

The results might sting. But they’ll show you exactly where the robots are blocking your visibility.

And once you know what the gatekeepers want, you can start giving it to them without sacrificing what makes your content brilliant for humans.

Aligning your strategy with the search engine bots

Part 2: Designing for discovery

Your website is like a maze. The robots are like blind mice trying to find the cheese. Yet many businesses make the maze harder than it needs to be, then wonder why their content never gets found.

Getting your content found doesn’t happen by magic, although sometimes, it might seem that way. It’s a process.

The bots arrive at your site.

They attempt to crawl its pages.

They try to understand the content.

Based on what they see, they decide what to index. Once indexed, they determine where it should rank in the search engine results pages (SERPs) – the higher, the better.

Break any link in this chain, and it’s game over. Yet many websites break it in multiple places through simple ignorance of how the algorithms actually work. Here’s what you need to know:

Crawlability: can the robots actually reach your content?

Sounds obvious, right? It isn’t.

Your brilliant blog post might be:

- Blocked by robots.txt (yes, people still mess this up)

- Hidden behind JavaScript that some bots can’t execute, or only render after a delay

- Buried so deep in your site’s structure that the crawl budget (the number of pages a bot is willing to crawl on a website within a specific timeframe) runs out

- Loading so slowly that bots give up and leave

So, check your robots.txt file right now. Seriously. You’ll be amazed at how many sites accidentally block their best content.
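If you’d rather check robots.txt rules programmatically than by eye, here’s a minimal sketch in Python using the standard library’s `urllib.robotparser`. The robots.txt content and the example.com URLs are placeholders; in practice you’d load your live file. One caveat: Python’s parser uses simple prefix matching and applies rules in file order, whereas Google supports `*` and `$` wildcards and gives precedence to the most specific rule, so treat this as a first pass rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt; in practice, paste in (or download) your live file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog/
Disallow: /checkout/
"""

# Pages you want search engines to crawl (example.com URLs are placeholders).
IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/blog/best-post/",
    "https://www.example.com/products/coffee-machine/",
]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that these robots.txt rules stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, IMPORTANT_URLS):
        print("Blocked:", url)
```

Run against this placeholder file, the sketch flags the blog post: exactly the kind of accidental block that hides your best content.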

Indexability: permission to appear in search

Finding your content isn’t enough. The bots need permission to show it in their search results. Yet just one misplaced tag can make your entire product catalogue invisible. And you’d never know unless you looked. Some of the things to check for here include:

- Accidental noindex tags (left over from development)

- Canonical tags pointing to the wrong page

- Duplicate content confusing the search engines

- Parameters creating infinite URL variations
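To spot the first two problems on a single page, you can parse its HTML for a robots meta tag and a canonical link. The sketch below uses Python’s built-in `html.parser`; the function names are hypothetical and the URLs are placeholders. It doesn’t cover the `X-Robots-Tag` HTTP header, which can also carry a noindex directive, and the canonical comparison only ignores trailing slashes, so expect some false positives.

```python
from html.parser import HTMLParser
from typing import List, Optional


class IndexabilityParser(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page's HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.robots_directives: List[str] = []
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() in ("robots", "googlebot"):
            # content="noindex, follow" becomes ["noindex", "follow"]
            self.robots_directives += [
                d.strip().lower() for d in attributes.get("content", "").split(",")
            ]
        elif tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href")


def indexability_issues(page_url: str, html: str) -> List[str]:
    """Flag a noindex directive, or a canonical tag pointing at a different URL."""
    parser = IndexabilityParser()
    parser.feed(html)
    issues = []
    if "noindex" in parser.robots_directives:
        issues.append("noindex directive found")
    if parser.canonical and parser.canonical.rstrip("/") != page_url.rstrip("/"):
        issues.append(f"canonical points elsewhere: {parser.canonical}")
    return issues
```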

Structured data: speaking the robots’ language

Schema markup is like Google Translate for websites. It explicitly tells the bots what your content means.

This is where many websites miss massive opportunities.

A properly marked-up product page (this is a product; it costs £99; it’s in stock; it has 47 five-star reviews) helps the bots understand what the page is about, and can make it eligible for rich results such as prices and star ratings in search.

Without schema, the bots have to guess. And they don’t always guess right.
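Here’s roughly what that product example could look like as JSON-LD, the structured data format Google recommends, generated with a short Python helper. The helper and the product name are hypothetical, and it assumes all 47 reviews are five-star, so the average rating is 5. `Product`, `Offer`, `AggregateRating` and the `https://schema.org/InStock` availability value are standard schema.org vocabulary.

```python
import json


def product_jsonld(name: str, price_gbp: float, in_stock: bool,
                   rating_value: float, review_count: int) -> str:
    """Build a schema.org Product snippet wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price_gbp:.2f}",
            "priceCurrency": "GBP",
            "availability": "https://schema.org/InStock" if in_stock
            else "https://schema.org/OutOfStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


# Hypothetical product: £99, in stock, 47 reviews averaging five stars.
print(product_jsonld("Example Espresso Machine", 99, True, 5, 47))
```

Paste the output into the page’s HTML and check it with Google’s Rich Results Test before relying on it.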

Your next action step

Stop guessing. Start knowing. Fire up Screaming Frog, Semrush or Google Search Console and look for issues like:

- 404 errors killing your user journeys

- Redirect chains that waste crawl budget

- Orphan pages hiding your best content

- Missing, duplicate or irrelevant meta descriptions and title tags

- Duplicate content that competes with itself
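Orphan pages and overly deep pages are both questions about your internal link graph, so once you’ve exported a list of internal links from a crawler, a breadth-first search from the homepage can answer them. In this Python sketch, the link data and sitemap are hypothetical, and the three-click threshold is an assumed rule of thumb, not a Google limit.

```python
from collections import deque

# Hypothetical crawl export: each page mapped to the internal pages it links to.
LINKS = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo/"],
    "/services/seo/": [],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/blog/post-2/"],
    "/blog/post-2/": ["/blog/post-3/"],
    "/blog/post-3/": [],
    "/guides/best-guide/": ["/"],  # links out, but nothing links in
}

# Pages listed in the XML sitemap (also hypothetical).
SITEMAP = set(LINKS)


def click_depths(links, start="/"):
    """Breadth-first search: minimum number of clicks from start to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit(links, sitemap, max_depth=3):
    """Return (orphan pages, pages buried deeper than max_depth clicks)."""
    depths = click_depths(links)
    orphans = sorted(sitemap - set(depths))
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    return orphans, too_deep


if __name__ == "__main__":
    orphans, too_deep = audit(LINKS, SITEMAP)
    print("Orphans:", orphans)
    print("Too deep:", too_deep)
```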

Once you’ve got a list of issues, fix the most obvious problems first. Broken links. Missing titles or metadata. Basic stuff that shouldn’t be broken.

Next, tackle your site’s structure. Simplify the URLs. Create a logical hierarchy. Build clear user journeys from the homepage to every important page.

Finally, add schema markup. Start with the basics: organisation, products, articles. Google’s Rich Results Test and the Schema Markup Validator will help you check your work.

Technical SEO isn’t sexy. But it’s the foundation upon which everything else is built. Get it right, and it will give every piece of content you publish a fighting chance of getting indexed. Get it wrong, and you’re just hitting publish and hoping the robots will read it. The choice is yours.

Optimising your website for semantic understanding

Part 3: Content that speaks machine

Remember when SEO meant picking a keyword and hammering it into submission?

Those days are long gone. They ended when Google started deploying natural language processing at scale.

Today, while keywords are still an important element of good SEO, they’re no longer the content kings they once were. Stuffing ‘cheap Worcestershire plumber’ 17 times into your page won’t fool anyone anymore. Especially not Google’s AI-powered algorithms, which are built to interpret content much as a human reader would.

The game has changed. The search engines now understand context, meaning and relationships between concepts. They don’t just match strings of text anymore. They interpret ideas.

You might think that the AI tools that have come to the fore in the past couple of years – ChatGPT, Google Gemini and Copilot – ushered in this change. But the roots stretch back much further.

BERT, Google’s natural language processing model, was unveiled in 2018, rolled into Google Search in 2019 and changed everything. Suddenly, Google’s robots could understand prepositions, context and the subtle differences between ‘bank’ (financial) and ‘bank’ (riverside). MUM – Google’s Multitask Unified Model – followed in 2021, bringing the ability to interpret complex queries across different formats, including text and images.

These technologies laid the groundwork for the large language models now in everyday use. They marked the start of a fundamental shift in how machines read content.

Google’s algorithms now process language more like humans do, understanding the concepts, ideas and intent behind the words.

It means your old keyword strategy is probably holding you back. Worse, it might be actively hurting your website. Because content written for keyword density now reads like it was written for keyword density.

Mechanical. Repetitive. Unhuman.

Entity-driven SEO: Writing for concepts, not keywords

Modern search engines think in ‘entities’, that is, anything with a distinct meaning, such as a person, place, thing or concept. So, if you write about ‘London’ alongside the Thames and Westminster, Google can tell you mean the capital city of England (rather than, say, London, Ontario), with all its associated attributes, history and connections.

Google’s web of understanding means you need to write with semantic richness. If you’re creating content about coffee machines, Google expects to see related entities: espresso, grinding, pressure, beans, barista, extraction. Not because you’re keyword stuffing, but because that’s how humans naturally discuss coffee machines.

The magic happens when you connect the different entities in meaningful ways. For example, if you’re writing about sustainable coffee, mention fair trade, carbon footprint, shade-grown and Rainforest Alliance. Google recognises these relationships and uses them to understand your content’s depth.

Formatting for robot comprehension

Robots parse information differently from humans. They need structure, clarity and logical organisation. This is where good user experience comes into play.

It’s not about dumbing down your content. It’s about making it scannable for both audiences.

Using the correct headers creates hierarchy. It’s how the algorithms understand your content’s structure. Use the h1 tag to announce your main topic, h2s to break down the major sections, h3s for subsections, and so on.
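A quick way to check that a page’s headers follow that hierarchy is to pull out the h1 to h6 tags in order and look for skipped levels. This Python sketch uses the standard-library `html.parser`; the function names are hypothetical, and the ‘exactly one h1’ check encodes the advice above rather than a hard Google rule.

```python
from html.parser import HTMLParser
from typing import List


class HeadingParser(HTMLParser):
    """Records heading levels (1 to 6) in the order they appear."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: List[int] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> List[str]:
    parser = HeadingParser()
    parser.feed(html)
    problems = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected one h1, found {h1_count}")
    # Going deeper by more than one level at a time (h2 straight to h4) skips a level.
    for previous, current in zip(parser.levels, parser.levels[1:]):
        if current > previous + 1:
            problems.append(f"h{previous} followed by h{current} skips a level")
    return problems
```

Moving back up the hierarchy (an h3 followed by a new h2) is fine, so the sketch only flags jumps downwards.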

Your paragraphs should tackle one idea each. Long, meandering paragraphs confuse machine parsing. Short, focused paragraphs improve comprehension for both bots and humans.

Lists, tables and clear formatting also create machine-readable structures that often get pulled directly into search results.

What this means for your content strategy

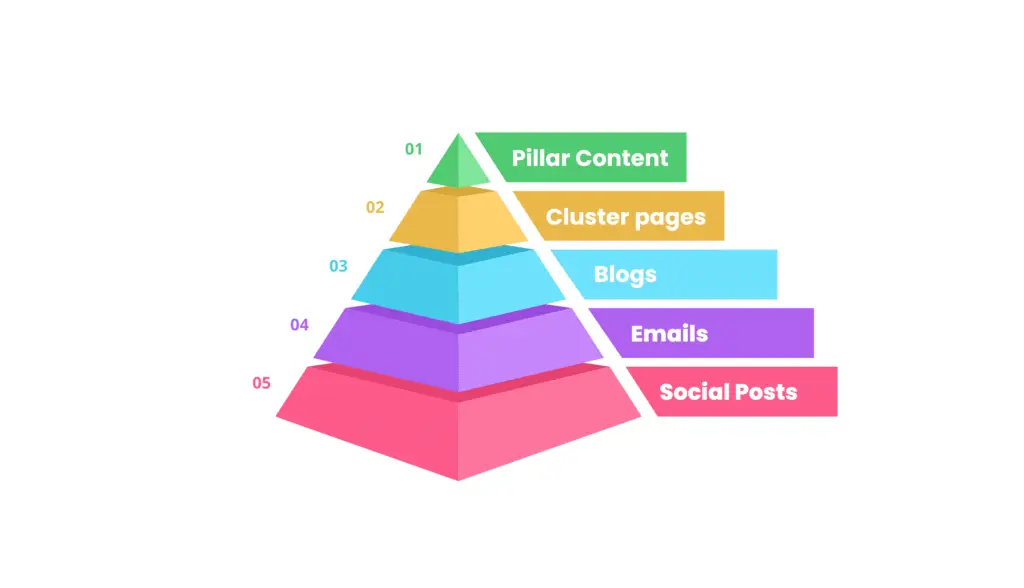

Single pages fighting for rankings are yesterday’s strategy. Today’s approach is all about building subject authority through interconnected content clusters.

We call this the pyramid of content.

Your main ‘pillar’ page sits at the peak. Let’s say it covers ‘digital marketing strategy’ comprehensively.

The next level down features several related ‘cluster’ pages. These take a deep dive into specific aspects of digital marketing strategy, such as SEO, content marketing, paid advertising and email campaigns. Each of these links back to the main pillar page.

Below that, the content is broken down further, into either blogs related to the sub-topics, or smaller chunks you share via your other marketing channels – email, social media, paid ads and external websites.

These all link back to your cluster pages, which, in turn, link back to the main pillar.
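If you keep your pyramid as a simple map of pillar, cluster and supporting pages, checking that each page links back up a level is a short loop. The page paths and link data below are hypothetical, standing in for a real site crawl, and the function name is illustrative.

```python
# Hypothetical content pyramid: pillar -> cluster pages -> supporting posts.
PYRAMID = {
    "/digital-marketing-strategy/": {
        "/seo/": ["/blog/seo-basics/", "/blog/technical-seo/"],
        "/content-marketing/": ["/blog/content-calendar/"],
    }
}

# Hypothetical internal links found on each page during a crawl.
PAGE_LINKS = {
    "/seo/": {"/digital-marketing-strategy/", "/content-marketing/"},
    "/content-marketing/": {"/seo/"},  # missing the link up to the pillar
    "/blog/seo-basics/": {"/seo/"},
    "/blog/technical-seo/": {"/seo/"},
    "/blog/content-calendar/": {"/content-marketing/"},
}


def missing_uplinks(pyramid, page_links):
    """List (page, expected_parent) pairs where a page doesn't link back up the pyramid."""
    missing = []
    for pillar, clusters in pyramid.items():
        for cluster, posts in clusters.items():
            if pillar not in page_links.get(cluster, set()):
                missing.append((cluster, pillar))
            for post in posts:
                if cluster not in page_links.get(post, set()):
                    missing.append((post, cluster))
    return missing


if __name__ == "__main__":
    for page, parent in missing_uplinks(PYRAMID, PAGE_LINKS):
        print(f"{page} should link to {parent}")
```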

This architecture does two crucial things.

First, it demonstrates your subject expertise to the search engines. You’re not just talking about concepts; you’re exploring them thoroughly.

Second, it creates semantic relationships that the algorithms can map and understand. However, for this to happen, the internal links between these pages on your website need to make semantic sense. For example, linking your ‘content marketing’ page to your ‘SEO strategy’ page makes sense, because the two topics are closely related.

Don’t force links between unconnected topics just to spread link juice. Google’s too smart for that now.

Optimising for rich results

Featured Snippets used to be the holy grail of visibility in Google. Position zero. Above even the first organic result. But AI Overviews are fast claiming that title.

Instead of pulling one perfect paragraph, Google’s AI now synthesises comprehensive answers from multiple sources. The prize isn’t just getting to position zero anymore. It’s being one of the trusted sources that AI Overviews cite.

Getting cited in AI Overviews requires different tactics. Structure your content for extraction, rather than relying on a single direct answer. Make your key points clear and quotable. Use headers that signal your expertise, and back any definitive statements with data.

But don’t abandon optimising for Featured Snippets entirely. They still appear in Google’s search results, and the basics haven’t disappeared.

You should still use direct questions as headers, followed by concise answers. Lists for processes and tables for comparisons still help both Featured Snippets and AI Overviews extract your information.

And schema markup is even more critical. It’s like leaving breadcrumbs for Google’s AI.

Without it, AI might miss your expertise entirely. With it, you’re explicitly telling the machines what information you’re serving up. And if they understand your content, they’re more likely to cite it.

Your next steps to take

The theory’s great, but you need to actually do something with it if you want to create content that the bots understand and humans love.

Start with search intent. Understanding what people are trying to achieve or learn when they search is crucial. It allows you to build content that comprehensively addresses that intent, naturally incorporating related entities and concepts.

Map your content architecture before writing. Identify your pillar topics and their supporting cluster pages. Plan the semantic connections between them.

Write naturally, but strategically. Use varied vocabulary that covers your topic thoroughly. Include synonyms, related terms and contextual language that humans would expect.

And format it for both audiences. Content that’s structured to help the machines parse it will invariably help humans scan it, too.

Finally, test and refine your content using tools that analyse semantic coverage, such as MarketMuse or Text Optimizer. But don’t let these tools drive your writing. Let them validate that your human-first content also speaks fluent robot.

Signals that matter in a bot-first world

Part 4: Earning algorithmic trust

Google doesn’t trust you. Not personally, anyway.

Its entire business model depends on serving trustworthy results. If it feeds its users rubbish information, they’ll switch to another search engine.

So, from the algorithm’s perspective, your content starts with zero credibility and must earn every point.

Google’s algorithms obsess over trust signals. Especially for content that could impact someone’s health, wealth or happiness.

Google calls these ‘your money or your life’ (YMYL) topics. That’s because people can suffer real consequences if Google serves up search results that get medical advice, financial guidance or legal information wrong.

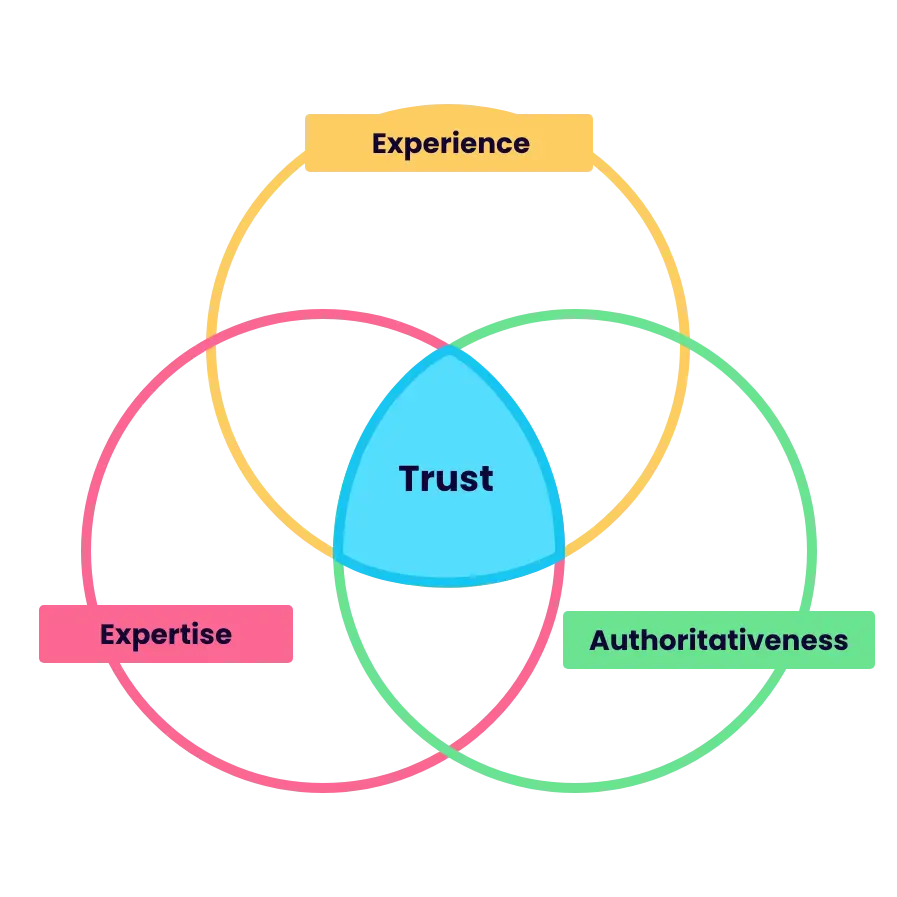

Understanding E-E-A-T

E-E-A-T is Google’s framework for evaluating the credibility of content. It stands for Experience, Expertise, Authoritativeness and Trustworthiness. While E-E-A-T isn’t a ranking factor itself, Google’s systems use a mix of signals to identify content that demonstrates it, and it sits at the heart of the guidelines Google’s human search quality raters use to assess results.

Experience asks, has the author actually done this? First-hand knowledge beats second-hand research every time. A plumber writing about fixing boilers carries more weight than a content writer Googling facts about plumbing.

Expertise is about credentials and demonstrable knowledge. Your About page, author bios and professional history all feed into this evaluation. Content from an anonymous source starts with a trust deficit.

Authoritativeness is about your standing in your industry, network or community. It stretches far beyond your website, to look at who else considers you an authority. Media mentions, quality backlinks and industry citations all help build digital proof that others vouch for your credibility.

And trustworthiness ties it all together. Accurate information, transparent sourcing and clear corrections when you get things wrong. Plus, the technical basics – secure hosting, privacy policies and genuine contact information.

The credibility signals that the robots check

Google wants to know who’s behind the content on your website. Real names. Real faces. Real credentials. You might not realise it, but that generic ‘admin’ byline on all your blog posts could be killing your website’s credibility. Your author profiles should include their professional background, relevant qualifications and links to other authoritative work. LinkedIn profiles, professional associations or memberships, work published elsewhere. All these connections build algorithmic confidence.
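One way to make those author connections explicit to machines is schema.org `Person` markup, where the `sameAs` property lists the author’s profiles elsewhere on the web. This Python sketch builds that JSON-LD; the helper, author, employer and profile URLs are placeholders, and you’d typically reference the person as the `author` in your article markup.

```python
import json
from typing import List


def author_jsonld(name: str, job_title: str, employer: str, profile_urls: List[str]) -> str:
    """Build schema.org Person markup that ties an author to their other profiles."""
    person = {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "worksFor": {"@type": "Organization", "name": employer},
        # sameAs points to pages that unambiguously identify the same person.
        "sameAs": profile_urls,
    }
    return json.dumps(person, indent=2)


# Placeholder author details for illustration only.
print(author_jsonld(
    "Jane Example",
    "Head of SEO",
    "Example Agency Ltd",
    ["https://www.linkedin.com/in/jane-example", "https://www.example.org/speakers/jane-example"],
))
```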

Backlinks still matter, but quality trumps quantity. One link from a trusted, high-authority website beats a thousand mentions on random, low-quality blogs.

Your website’s internal trust signals matter, too. Broken links, outdated information and technical errors can all erode your website’s credibility and harm your site’s trust score.

Building technical trust

HTTPS, mobile responsiveness, page load speed and Core Web Vitals all help build trust in your website.

Think about it from Google’s perspective. Would a trustworthy financial adviser’s site have security warnings? Would a credible healthcare provider’s site crash on mobile? Would an authoritative legal resource take 30 seconds to load?

The technical elements of your website signal your professional competence to the algorithms. They demonstrate that you invest in, and value, user experience and security.

Building and protecting your trust score

Trust isn’t built overnight. Without it, even perfect content remains invisible.

So, start with a trust audit. Every piece of content needs clear attribution to credible authors. Build out author profiles that showcase genuine expertise. Include photos, bios and credentials that matter in your industry.

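Review your backlink profile. If you find a large number of spammy or manipulative links pointing at your site, Google’s disavow tool lets you ask it to ignore them, although Google says most sites will never need it. Focus on earning mentions from respected industry sources through genuine engagement and thought leadership rather than through link schemes.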

Update or remove any outdated content. Nothing screams ‘untrustworthy’ like an article that presents outdated information as fact. Either update it with current information or redirect it to newer, more accurate content.

Review your website regularly. Set calendar reminders to check statistical content, update time-sensitive information and refresh anything that could mislead your readers.

Finally, invest in the technical foundations of your website. Secure hosting, clean code and fast loading times all signal to the algorithms that you’re a professional operation worth trusting.

Marketing to robots and humans

Part 5: Futureproofing your strategy

The rules have changed again. While you were mastering traditional SEO, AI broke the internet. Tools like ChatGPT, Gemini and Copilot are the new gatekeepers.

Soon, many searches won’t even direct users to websites. AI will answer them directly, pulling from the content it deems authoritative. So, your challenge is being the source that the AI quotes.

Forget choosing between robots and humans. You need both.

Bots get you discovered. Humans drive conversions. If you optimise for one but fail at the other, you’ll get traffic that doesn’t convert. Or worse, no traffic at all.

It isn’t about gaming two systems. It’s about creating content so good that bots and humans both love it.

Optimising for AI Overviews

As we mentioned earlier, Google’s AI doesn’t browse websites the way a person does. It synthesises answers from multiple sources and presents them as AI Overviews. So, your content needs to be quotable, extractable and easy to summarise. That means:

- Clear topic sentences that state key points upfront

- Concise paragraphs that make sense in isolation

- Logical flow that machines can follow and extract from

- Factual statements backed by data and sources

Optimising for voice search

Voice search has flipped traditional SEO on its head. Nobody speaks in keywords. They ask complete questions.

‘Indian takeaway Nottingham’ isn’t how humans talk. ‘Where’s the best Indian takeaway in Nottingham?’ is. Your content needs to match this shift from search terms to conversations.

So, structure your pages to answer real questions, rather than strings of keywords masquerading as questions. Think about the queries your customers might voice into their phones while walking down the street. FAQs are a good way to present these answers. They mirror how people naturally seek information.
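If you do present answers as FAQs, schema.org’s `FAQPage` type lets you mark up each question and its answer explicitly. Note that Google now shows FAQ rich results for only a limited set of authoritative government and health sites, so treat this markup as a way of making your question-and-answer structure machine-readable rather than a route to a rich result. The Python helper and the questions and answers are hypothetical.

```python
import json
from typing import List, Tuple


def faq_jsonld(pairs: List[Tuple[str, str]]) -> str:
    """Turn (question, answer) pairs into schema.org FAQPage JSON-LD."""
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }, indent=2, ensure_ascii=False)


# Hypothetical questions a customer might ask out loud.
print(faq_jsonld([
    ("Do you deliver to my area?", "We deliver anywhere within five miles of the restaurant."),
    ("What time do you open?", "We open at 5pm every day."),
]))
```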

Optimising for multimodal search

Multimodal search combines text, voice, images and video into a single query. And it’s exploding.

Search isn’t just text-based anymore. People point their phone at a product and ask, ‘Where can I buy this?’ They upload screenshots to find similar items. They speak questions while showing images to AI assistants.

Google Lens processes billions of visual searches monthly. Most social media platforms have features that turn every image or video into a potential search query.

All this means your visual content isn’t just for decoration anymore. It’s your very own discovery channel.

Every image on your site needs machine-readable context, including:

- Descriptive file names (not IMG_1234.jpg)

- Comprehensive alt text that describes content and context

- Surrounding text that reinforces the meaning of the image

- Schema markup for product images
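You can automate the first two checks in that list. This Python sketch scans a page’s HTML for images with camera-style default file names or no alt text; the file-name pattern is an assumption you’d tune for your own site, and the function names are hypothetical. An empty `alt=""` is legitimate for purely decorative images, so the sketch flags it for review rather than as an error.

```python
import posixpath
import re
from html.parser import HTMLParser
from typing import List, Optional, Tuple
from urllib.parse import urlparse

# Camera-style default names such as IMG_1234.jpg or DSC0042.png (assumed pattern).
GENERIC_NAME = re.compile(r"^(img|dsc|image|photo)[_-]?\d+\.\w+$", re.IGNORECASE)


class ImageParser(HTMLParser):
    """Collects (src, alt) pairs; alt is None when the attribute is absent."""

    def __init__(self) -> None:
        super().__init__()
        self.images: List[Tuple[str, Optional[str]]] = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            alt = (attributes["alt"] or "") if "alt" in attributes else None
            self.images.append((attributes.get("src") or "", alt))


def image_issues(html: str) -> List[str]:
    parser = ImageParser()
    parser.feed(html)
    issues = []
    for src, alt in parser.images:
        filename = posixpath.basename(urlparse(src).path)
        if GENERIC_NAME.match(filename):
            issues.append(f"{src}: generic file name")
        if alt is None:
            issues.append(f"{src}: missing alt text")
        elif not alt.strip():
            issues.append(f"{src}: empty alt text (fine only if decorative)")
    return issues
```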

Your videos need the same attention. Auto-generated captions often miss the nuance and technical terms that give your videos authority. Proper, human-generated transcripts give the search engines in-depth information to index.

If you ignore visual optimisation, you’re making your content invisible to anyone using their camera as a search tool. And that’s not good.

Using AI to create content

While using AI to create content at scale sounds efficient, it means the internet is fast becoming an echo chamber of regurgitated generic content. It’s a trap many businesses are falling into.

Google’s already fighting back. Its recent core updates and spam policies have targeted ‘scaled content abuse’: pages mass-produced to game rankings, whether by people or by AI. The message is clear: AI-assisted content is OK. Low-value, AI-only content churned out at scale is dead on arrival.

AI can still have a place in your content strategy. But it shouldn’t be the only thing you rely on. Your content still needs the human touch to provide nuance and context, and to ensure it’s accurate and aligns with your brand voice. So, use AI for:

- Initial research and generating ideas

- Data analysis and spotting trends

- Outlines and first drafts

- Spelling, grammar and clarity

But always add original insights and expertise, real-world examples and experience, and human voice and perspective. Also, always check and verify any facts before publishing. If you don’t, the robots will, and you might be penalised, which could tank your website.

Your next steps

Futureproofing your content for AI-powered search starts with an audit of your content’s structure. Can AI easily extract the key information? You can test this by asking ChatGPT to summarise your pages. If it struggles, AI Overviews probably will, too.

Next, develop more question-focused content. Map your most common customer questions to comprehensive answers on your website and build content that directly addresses voice search queries.

Treat your website visuals as content, not as decoration. Every image, video and audio file should be discoverable, so make sure they’re optimised properly.

And if you’re using AI tools to create new content, always maintain human oversight for quality and originality. Also, track when AI Overviews reference your content. Today’s mentions are tomorrow’s backlinks.

The key takeaway here is that the future isn’t human versus robot.

It’s human with robot.

Master both, and visibility will follow.

Ignore either, and you’ll be invisible to everyone.

Ready to stop being invisible?

You’ve read this article. You’ve got the framework. You now know how the robots see your content.

But knowing and doing are different things. Most businesses read guides like this, nod along, but change nothing.

The result?

Their content stays invisible. Their competitors keep winning. The gap keeps growing.

Don’t be most businesses. Take action today.

We’ve created a practical tool to turn this knowledge into action. Our free Visibility-First Strategy Audit contains 15 critical questions to help you diagnose exactly where the algorithms are blocking your website’s success. It walks you through:

- Technical barriers killing your visibility

- Content structure issues confusing the search engines

- Semantic gaps limiting your reach

- Trust signals you’re missing

- Optimisation checks for AI-driven search

Stop guessing what the algorithms want. Start knowing exactly where you stand and what to fix next.

Make the robots work with you, not against you. Before your competitors do.

Paul Dyer

Paul is a director of QBD and our in-house SEO guru. He has more than 25 years of experience with search engines, was around when Google launched and has tracked them ever since. His expertise in digital marketing has enabled him to grow several businesses in the UK to multi-million-pound turnover, with full trade exits. He now spends his time helping other companies navigate the digital landscape and implement effective growth strategies.

Jon Smart

Jon is our Head of Creative Content. He works with a range of QBD clients, producing engaging, SEO-friendly website and digital content to help them reach a wider audience. He does this by gaining a deep understanding of who our clients are, what they do, who their customers are and what makes them special, then helping them tell their brand story in a way that connects with their target audiences.